There’s debate surrounding the term “logical data warehouse.” Some argue that it is a new concept, while others argue that all well-designed data warehouses are logical and so the term is meaningless. This is a key point I’ll address in this post.

I’ll also discuss data warehousing that incorporates some of the technologies and approaches we’ve covered in previous installments of this series (1, 2, 3, 4, 5, 6) but with a different architecture that embraces “any data, anywhere.”

So what is a “logical data warehouse?”

Bill Inmon and Barry Devlin provide two oft-quoted definitions of a “data warehouse.” Inmon says “a data warehouse is a subject-oriented, integrated, time-variant and non-volatile collection of data in support of management’s decision-making process.”

Devlin stripped down the definition, saying “a data warehouse is simply a single, complete and consistent store of data obtained from a variety of sources and made available to end users in a way they can understand and use in a business context.”

Although these definitions are widely adopted, there is some disparity in their interpretation. Some insist that such definitions imply a single repository, and thus a limitation.

On the other hand, some argue that a “collection of data” or a “single, complete and consistent store” could just as easily be virtual and therefore not inherently singular. They attribute the language to the fact that most early implementations were single, physical data stores because of technology limitations.

Mark Beyer of Gartner is a prominent name in the former, singular repository camp. In 2011, he said “the logical data warehouse (LDW) is a new data management architecture for analytics which combines the strengths of traditional repository warehouses with alternative data management and access strategy,” and that definition has since been widely circulated.

So proponents of the “logical data warehouse,” as defined by Mark Beyer, don’t disagree with the value of an integrated collection of data. They just feel that if said collection is managed and accessed as something other than a monolithic, single physical database, then it is something different and should be called a “logical data warehouse” instead of just a “data warehouse.”

As the author of a series of posts about a jargon-filled [data] world, who am I to argue with the introduction of more new jargon?

In fact, I’d be remiss if I didn’t point out that the notion of a logical data warehouse has numerous jargon-rich enabling technologies and synonyms, including Service-Oriented Architecture (SOA), Enterprise Service Bus (ESB), Virtualization Layer, and Data Fabric, though the latter term also has other unrelated uses.

So the essence of a logical data warehouse approach is to bring diverse data assets together into a single, integrated virtual data warehouse, without the traditional batch ETL or ELT processes required to copy data into a single, integrated physical data warehouse.

One of the key attractions to proponents of the approach is the avoidance of recurring batch extraction, transformation and loading activities that, it is typically argued, cause delays and lead to decisions being made based on data that is not as current as it could be.

The idea is to use caching and other technologies to create a virtualization layer. That layer lets information consumers ask a question as though they were interrogating a single, integrated physical data warehouse. The virtualization layer, together with the data resident in some combination of underlying application systems, IoT data streams, external data sources, blockchains, data lakes, data warehouses and data marts, constitutes the logical data warehouse. It responds correctly and with more current data, without anyone having to extract, transform and load data into a centralized physical store.
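To make the idea concrete, here is a minimal sketch in Python. It is not how any particular vendor product works. The two in-memory SQLite databases stand in for separate source systems, and the names `VirtualizationLayer`, `revenue_by_customer`, `customer` and `sales_order` are all hypothetical, invented for illustration. The layer sends a query to each source at request time, assembles the answer in memory, and keeps it in a short-lived cache instead of copying data into a central store.

```python
import sqlite3
import time


def make_source(ddl, insert_sql, rows):
    """Create an in-memory SQLite database standing in for one source system."""
    conn = sqlite3.connect(":memory:")
    conn.execute(ddl)
    conn.executemany(insert_sql, rows)
    conn.commit()
    return conn


# Hypothetical source 1: an operational CRM application.
crm = make_source(
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT)",
    "INSERT INTO customer VALUES (?, ?)",
    [(1, "Acme"), (2, "Globex")],
)

# Hypothetical source 2: an order-processing application.
orders = make_source(
    "CREATE TABLE sales_order (order_id INTEGER, customer_id INTEGER, amount REAL)",
    "INSERT INTO sales_order VALUES (?, ?, ?)",
    [(10, 1, 250.0), (11, 1, 100.0), (12, 2, 75.0)],
)


class VirtualizationLayer:
    """Answers one integrated question by querying each source in place."""

    def __init__(self, crm_conn, orders_conn, cache_ttl_seconds=30.0):
        self.crm = crm_conn
        self.orders = orders_conn
        self.ttl = cache_ttl_seconds
        self._cache = {}  # question name -> (time stored, result)

    def revenue_by_customer(self):
        """Return {customer name: total order amount} across both sources."""
        key = "revenue_by_customer"
        now = time.monotonic()
        cached = self._cache.get(key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]  # still fresh enough, skip the sources

        # Bring the query to each data set rather than copying the data.
        names = dict(self.crm.execute("SELECT customer_id, name FROM customer"))
        totals = self.orders.execute(
            "SELECT customer_id, SUM(amount) FROM sales_order GROUP BY customer_id"
        ).fetchall()

        # Assemble the integrated result in the layer itself.
        result = {names[cid]: total for cid, total in totals if cid in names}
        self._cache[key] = (now, result)
        return result


layer = VirtualizationLayer(crm, orders, cache_ttl_seconds=0.0)  # 0 disables caching
print(layer.revenue_by_customer())
```

With the cache disabled, an order inserted into the order system shows up in the very next answer, which is the currency argument in miniature. A longer time-to-live trades some of that currency for fewer round trips to the sources.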

While the moniker may be new, the idea of bringing the query to the data set(s) and then assembling an integrated result is not. There have been numerous successful implementations in the past, though they often required custom coding and rigorous governance to ensure response times and logical correctness.

Some would argue that such previous implementations were also not at the leading edge of data warehousing in terms of data volume or scope.

What is generating renewed interest in this approach is the continued frustration on the part of numerous stakeholders with delays attributed to ETL/ELT in traditional data warehouse implementations.

When you compound this with the often high costs of large (physical) data warehouse implementations, especially those based on MPP hardware, it’s not hard to see why. Those costs are juxtaposed against the promise of some new solutions from vendors like Denodo and Cisco that capitalize on the increasing prevalence of new technologies, such as the cloud and in-memory computing.

One topic that quickly becomes clear as one learns more about the various logical data warehouse vendor solutions is that metadata is a very important component. However, this shouldn’t be a surprise, as the objective is still to present a single, integrated view to the information consumer.

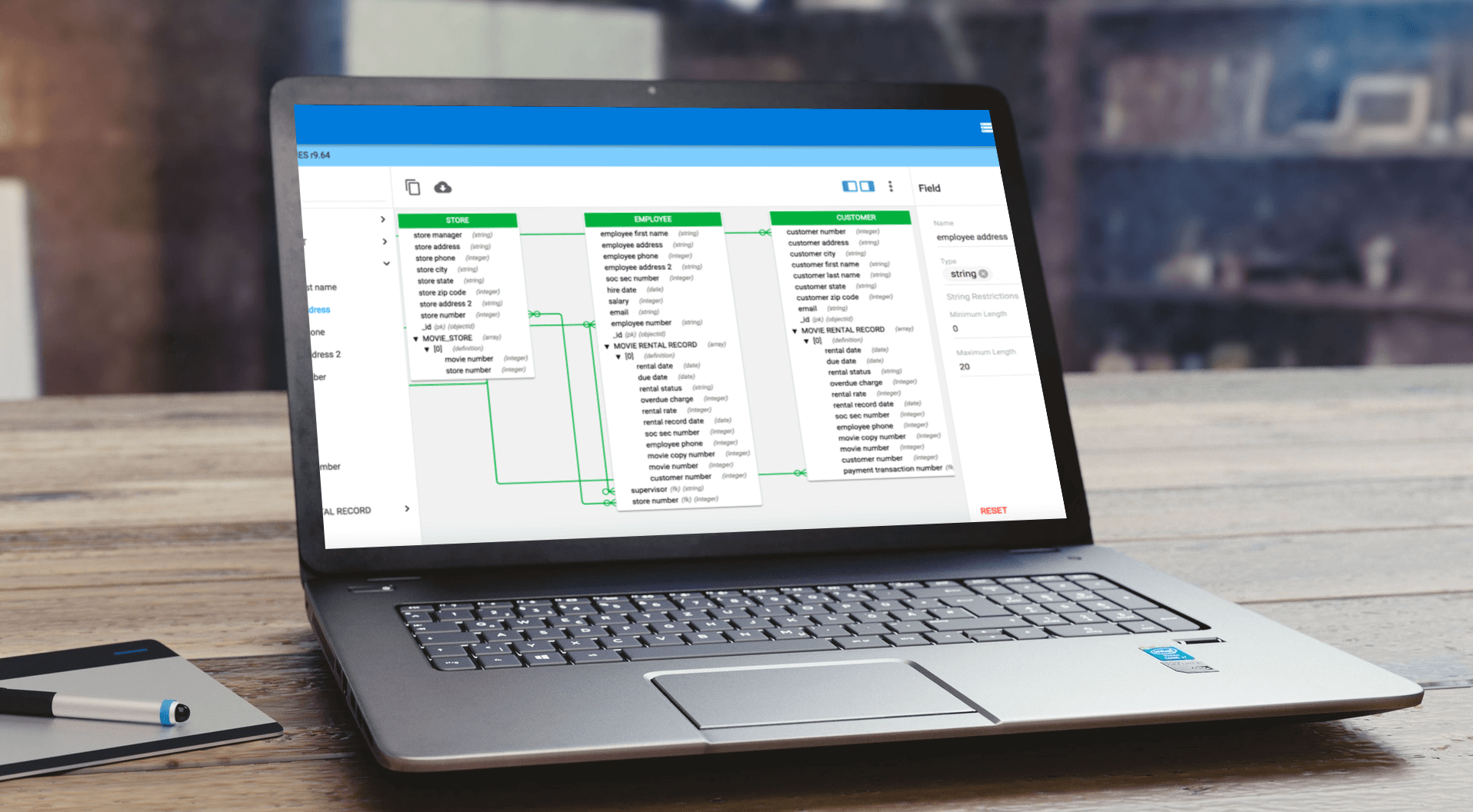

So a well-architected, comprehensive and easily understood data model is as important as ever, for two reasons. First, it ensures that information consumers can easily access properly integrated data. Second, the virtualization technology itself must depend on a properly architected data model to accurately transform an information request into queries to multiple data sources and then correctly synthesize the result sets into an appropriate response to the original information request.
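A small Python sketch may help show why the model matters so much here. Everything in it is an assumption made for illustration: the `LOGICAL_MODEL` mapping, the source names `crm` and `finance`, and the table and column names are hypothetical and do not reflect any vendor's metadata format. The point is only that the metadata drives both halves of the job: splitting a request for logical attributes into one query per physical table, and merging the result sets back together on a shared key.

```python
from collections import defaultdict

# Hypothetical logical model for a "Customer" entity: each logical
# attribute maps to the (source, table, column) that physically holds it.
LOGICAL_MODEL = {
    "customer_name": ("crm", "customer", "name"),
    "region": ("crm", "customer", "region_cd"),
    "credit_limit": ("finance", "account", "limit_amt"),
}

# Hypothetical metadata: the column in each table that identifies the customer.
KEY_COLUMNS = {
    ("crm", "customer"): "customer_id",
    ("finance", "account"): "cust_no",
}


def plan_queries(attributes):
    """Group the requested logical attributes by the physical table holding them."""
    plan = defaultdict(list)
    for attr in attributes:
        if attr not in LOGICAL_MODEL:
            raise KeyError(f"Unknown logical attribute: {attr}")
        source, table, column = LOGICAL_MODEL[attr]
        plan[(source, table)].append((attr, column))
    return dict(plan)


def to_sql(plan):
    """Render one SELECT per physical table, aliasing columns to logical names."""
    statements = {}
    for (source, table), columns in plan.items():
        key = KEY_COLUMNS[(source, table)]
        select_list = [f"{key} AS customer_key"]
        select_list += [f"{column} AS {attr}" for attr, column in columns]
        statements[(source, table)] = f"SELECT {', '.join(select_list)} FROM {table}"
    return statements


def synthesize(result_sets):
    """Merge per-source rows (dicts) into one row per customer_key."""
    merged = defaultdict(dict)
    for rows in result_sets:
        for row in rows:
            merged[row["customer_key"]].update(row)
    return [merged[key] for key in sorted(merged)]


plan = plan_queries(["customer_name", "credit_limit", "region"])
for target, sql in to_sql(plan).items():
    print(target, "->", sql)
```

If the mapping is wrong or incomplete, for example if `cust_no` and `customer_id` do not actually identify the same customers, the generated queries still run but the synthesized answer is wrong. No virtualization technology can compensate for that, which is why the model has to be properly architected first.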

We hope you’ve enjoyed our series, Data Modeling in a Jargon-filled World, and learned something from this post or one of the previous posts in the series (1, 2, 3, 4, 5, 6).

The underlying theme, as you’ve probably deduced, is that data modeling remains critical in a world in which the volume, variety and velocity of data continue to grow while information consumers find it difficult to synthesize the right data in the right context to help them draw the right conclusions.

We encourage you to read other blog posts on this site by erwin staff members and other guest bloggers and to participate in ongoing events and webinars.

If you’d like to know more about accelerating your data modeling efforts for specific industries, while reducing risk and benefiting from best practices and lessons learned by other similar organizations in your industry, please visit erwin partner ADRM Software.