Emerging technology has always played an important role in business transformation. In the race to collect and analyze data, provide superior customer experiences, and manage resources, new technologies always interest IT and business leaders.

KPMG’s The Changing Landscape of Disruptive Technologies found that today’s businesses are showing the most interest in emerging technologies such as the Internet of Things (IoT), artificial intelligence (AI) and robotics. Other emerging technologies that are making headlines include natural language processing (NLP) and blockchain.

In many cases, emerging technologies such as these are not fully embedded into business environments. Before they enter production, organizations need to test and pilot their projects to help answer some important questions:

- How do these technologies disrupt?

- How do they provide value?

Enterprise Architecture’s Role in Managing Emerging Technology

Pilot projects that take a small number of incremental steps, with small funding increases along the way, help provide answers to these questions. If the pilot proves successful, it’s then up to the enterprise architecture team to explore what it takes to integrate these technologies into the IT environment.

This is the point where new technologies go from “emerging technologies” to becoming another solution in the stack the organization relies on to create the business outcomes it’s seeking.

One of the easiest, quickest ways to pilot new technologies and put them into production is to use cloud-based services. All of the major public cloud platform providers have AI and machine learning capabilities.

Integrating new technologies based in the cloud will change the way the enterprise architecture team models the IT environment, but that’s actually a good thing.

Modeling can help organizations understand the complex integrations that bring cloud services into the organization, and help them better understand the service level agreements (SLAs), security requirements and contracts with cloud partners.

When done right, enterprise architecture modeling will also help the organization better understand the value of emerging technology, and even of the cloud migrations that increasingly accompany it. Once again, modeling helps answer important questions, such as:

- Does the model demonstrate the benefits that the business expects from the cloud?

- Do the benefits remain even if some legacy apps and infrastructure need to remain on premises?

- What type of savings do you see if you can’t consolidate enough to close an entire data center?

- How does the risk change?
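To make the savings questions concrete, here is a minimal sketch of the kind of arithmetic a model might capture. Everything in it is an assumption for illustration: the `Scenario` class, its field names, and all of the cost figures are hypothetical and would be replaced by numbers from your own environment. The one idea it encodes is that a data center's fixed cost only goes away once nothing is left running on premises.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Annual cost inputs for one hosting scenario (all values hypothetical)."""
    name: str
    datacenter_fixed: float  # facility cost that only disappears if the site closes
    on_prem_per_app: float   # yearly cost of running one app on premises
    cloud_per_app: float     # yearly cost of running one app in the cloud
    apps_on_prem: int
    apps_in_cloud: int

    def annual_cost(self) -> float:
        # The fixed facility cost applies as long as any app stays on premises.
        fixed = self.datacenter_fixed if self.apps_on_prem > 0 else 0.0
        return (fixed
                + self.apps_on_prem * self.on_prem_per_app
                + self.apps_in_cloud * self.cloud_per_app)


def savings(baseline: Scenario, target: Scenario) -> float:
    """Yearly savings of the target scenario compared with the baseline."""
    return baseline.annual_cost() - target.annual_cost()


# Illustrative figures only; they are not taken from any real environment.
baseline = Scenario("all on premises", 500_000, 20_000, 15_000, 40, 0)
partial = Scenario("partial migration", 500_000, 20_000, 15_000, 10, 30)
full = Scenario("full migration", 500_000, 20_000, 15_000, 0, 40)

for scenario in (partial, full):
    print(f"{scenario.name}: saves {savings(baseline, scenario):,.0f} per year")
```

With these made-up inputs, moving 30 of 40 apps saves 150,000 a year, while moving all 40 saves 700,000, because only the full migration removes the fixed data center cost. A real model would also need to account for migration effort, contracts, SLAs and risk, which this sketch leaves out.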

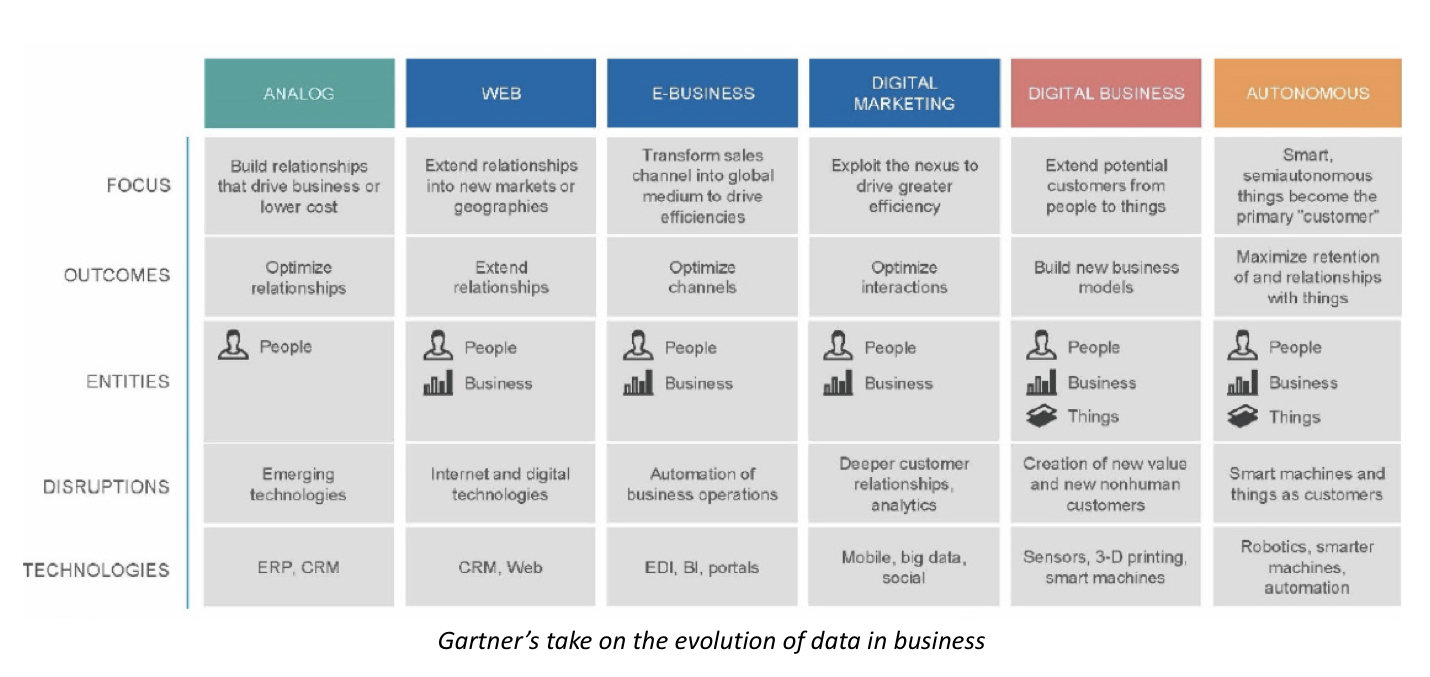

Many of the emerging technologies garnering attention today are on their way to becoming a standard part of the technology stack. But just as the web came before mobility, and mobility came before AI, other technologies will soon follow in their footsteps.

To evaluate these technologies efficiently and decide whether they are right for the business, organizations need to give both their enterprise architecture and business process teams visibility, so everyone understands how their environment and outcomes will change.

When the enterprise architecture and business process teams use a common platform and model the same data, their results will be more accurate and their collaboration seamless. This will cut significant time off the process of piloting, deploying and seeing results.

Outcomes like more profitable products and better customer experiences are the ultimate business goals. Getting there first is important, but only if everything runs smoothly on the customer side. The disruption of new technologies should take place behind the scenes, after all.

And that’s where investing in pilot programs and enterprise architecture modeling demonstrates value as you put emerging technology to work.