Businesses stand to gain a lot from a unified data platform.

This decade has seen data-driven leaders dominate their respective markets and inspire organizations across industries to fuel their businesses with data, drawing value from a strategic asset that often lies below the surface. It’s even been dubbed “the new oil,” but data is arguably more valuable than the analogy suggests.

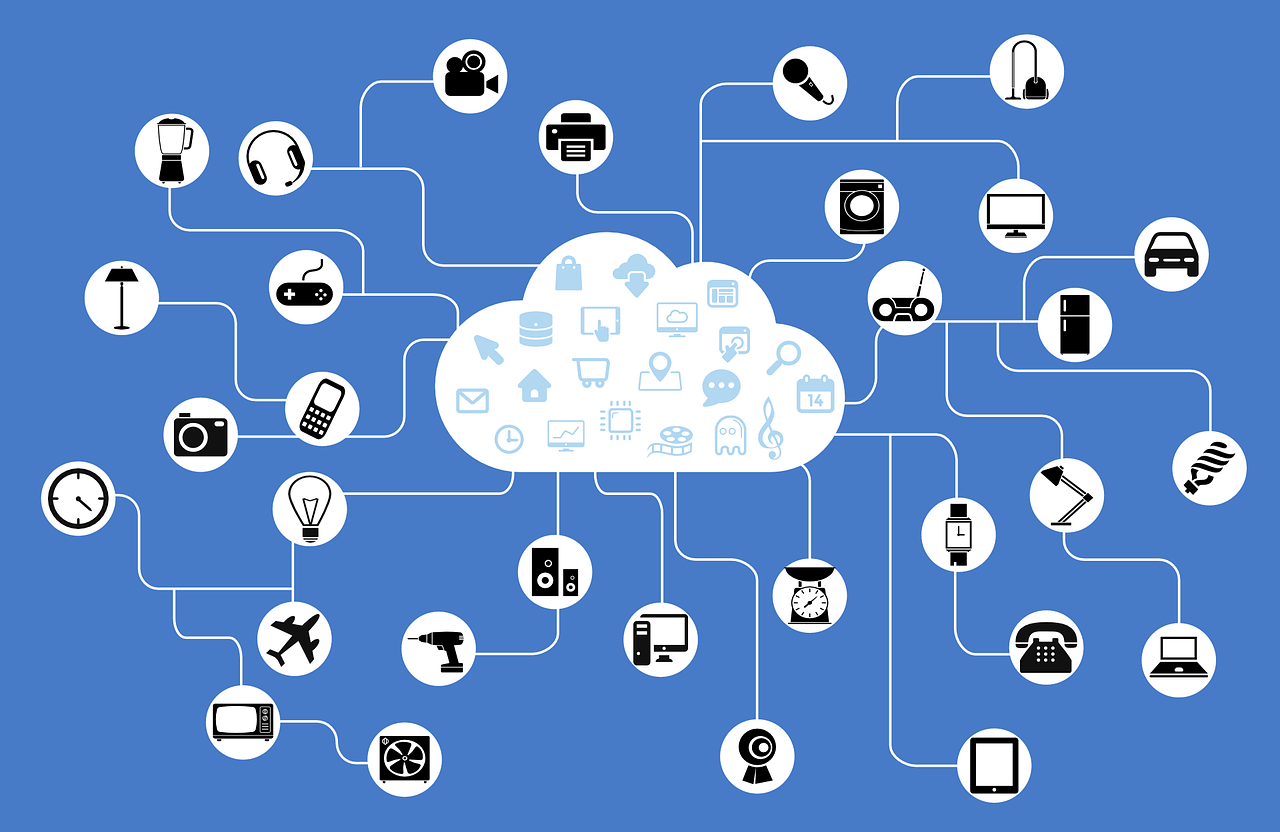

Data governance (DG) is a key component of the data value chain because it connects people, processes and technology as they relate to the creation and use of data. It equips organizations to better deal with increasing data volumes, the variety of data sources, and the speed at which data is processed.

But for an organization to realize and maximize its true data-driven potential, a unified data platform is required. Only then can all data assets be discovered, understood, governed and socialized to produce the desired business outcomes while also reducing data-related risks.

Benefits of a Unified Data Platform

Data governance can’t succeed in a bubble; it has to be connected to the rest of the enterprise. Whether an initiative is strategic, such as risk and compliance management, or operational, such as a centralized help desk, your data governance framework should span and support the entire enterprise and its objectives, which it can’t do from a silo.

Let’s look at some of the benefits of a unified data platform with data governance as the key connection point.

Understand current- and future-state architecture with business-focused outcomes:

A unified data platform with a single metadata repository connects data governance to the roles, goals, strategies and KPIs of the enterprise. Through integrated enterprise architecture modeling, organizations can capture, analyze and incorporate the structure and priorities of the enterprise and related initiatives.

This capability allows you to plan, align, deploy and communicate a high-impact data governance framework and roadmap that sets manageable expectations and measures success with metrics important to the business.

Document capabilities and processes and understand critical paths:

A unified data platform connects data governance to what you do as a business and the details of how you do it. It enables organizations to document and integrate their business capabilities and operational processes with the critical data that serves them.

It also provides visibility and control by identifying the critical paths that will have the greatest impacts on the business.

Realize the value of your organization’s data:

A unified data platform connects data governance to specific business use cases. The value of data is realized by combining different elements to answer a business question or meet a specific requirement. Conceptual and logical schemas and models provide a much richer understanding of how data is related and combined to drive business value.

Harmonize data governance and data management to drive high-quality deliverables:

A unified data platform connects data governance to the orchestration and preparation of data to drive the business, governing data throughout the entire lifecycle – from creation to consumption.

Governing the data management processes that make data available is of equal importance. By harmonizing the data governance and data management lifecycles, organizations can drive high-quality deliverables that are governed from day one.

Promote a business glossary for a shared understanding of data terminology:

A unified data platform connects data governance to the language of the business when discussing and describing data. Understanding the terminology and semantic meaning of data from a business perspective is imperative, but most business consumers of data don’t have technical backgrounds.

A business glossary promotes data fluency across the organization and vital collaboration between different stakeholders within the data value chain, ensuring all data-related initiatives are aligned and business-driven.

Instill a culture of personal responsibility for data governance:

A unified data platform is inherently connected to the policies, procedures and business rules that inform and govern the data lifecycle. The centralized management and visibility afforded by linking policies and business rules at every level of the data lifecycle will improve data quality, reduce expensive re-work, and improve the ideation and consumption of data by the business.

Business users will know how to use (and how not to use) data, while technical practitioners will have a clear view of the controls and mechanisms required when building the infrastructure that serves up that data.

Better understand the impact of change:

Data governance should be connected to the use of data across roles, organizations, processes, capabilities, dashboards and applications. Proactive impact analysis is key to efficient and effective data strategy. However, most solutions don’t tell the whole story when it comes to data’s business impact.

By adopting a unified data platform, organizations can extend impact analysis well beyond data stores and data lineage for true visibility into who, what, where and how the impact will be felt, breaking down organizational silos.
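To make the idea of extended impact analysis concrete, here is a minimal sketch, assuming the platform's metadata can be exposed as a simple dependency graph. It is not how erwin EDGE implements impact analysis; the node names, node types and the `impact_of_change` function are all hypothetical. Edges point from an asset to whatever depends on it, and a breadth-first traversal collects everything downstream of a changed asset, grouped by type, so the result covers roles, capabilities, dashboards and applications as well as tables and pipelines.

```python
from collections import deque

# Hypothetical metadata graph (illustration only): each node has a type, and
# edges point from an asset to the things that depend on it (downstream).
NODE_TYPES = {
    "crm.customers": "table",
    "etl.load_customer_dim": "process",
    "dw.customer_dim": "table",
    "dash.churn_report": "dashboard",
    "app.retention_campaigns": "application",
    "role.marketing_analyst": "role",
    "cap.customer_retention": "capability",
}

DEPENDENTS = {
    "crm.customers": ["etl.load_customer_dim"],
    "etl.load_customer_dim": ["dw.customer_dim"],
    "dw.customer_dim": ["dash.churn_report", "app.retention_campaigns"],
    "dash.churn_report": ["role.marketing_analyst", "cap.customer_retention"],
    "app.retention_campaigns": ["cap.customer_retention"],
}


def impact_of_change(changed, dependents, node_types):
    """Return every node downstream of `changed`, grouped by node type.

    Breadth-first traversal: each node is recorded once, even when several
    paths lead to it. The changed node itself is not included.
    """
    seen = {changed}
    queue = deque([changed])
    impacted = {}
    while queue:
        node = queue.popleft()
        for dep in dependents.get(node, []):
            if dep in seen:
                continue
            seen.add(dep)
            queue.append(dep)
            impacted.setdefault(node_types.get(dep, "unknown"), []).append(dep)
    return impacted


if __name__ == "__main__":
    result = impact_of_change("crm.customers", DEPENDENTS, NODE_TYPES)
    for kind, nodes in sorted(result.items()):
        print(f"{kind}: {', '.join(nodes)}")
```

In this toy graph, a change to `crm.customers` reaches not only the downstream warehouse table but also the churn dashboard, the marketing analyst role and the customer retention capability. A lineage-only view that stops at data stores would miss those last three.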

Getting the Competitive “EDGE”

The erwin EDGE delivers an “enterprise data governance experience” in which every component of the data value chain is connected.

Now with data mapping, it unifies data preparation, enterprise modeling and data governance to simplify the entire data management and governance lifecycle.

Both IT and the business have access to an accurate, high-quality, real-time data pipeline that fuels regulatory compliance, innovation and transformation initiatives with actionable insights.