NoSQL database technology is gaining traction across industries. So what is it, and why is its use increasing?

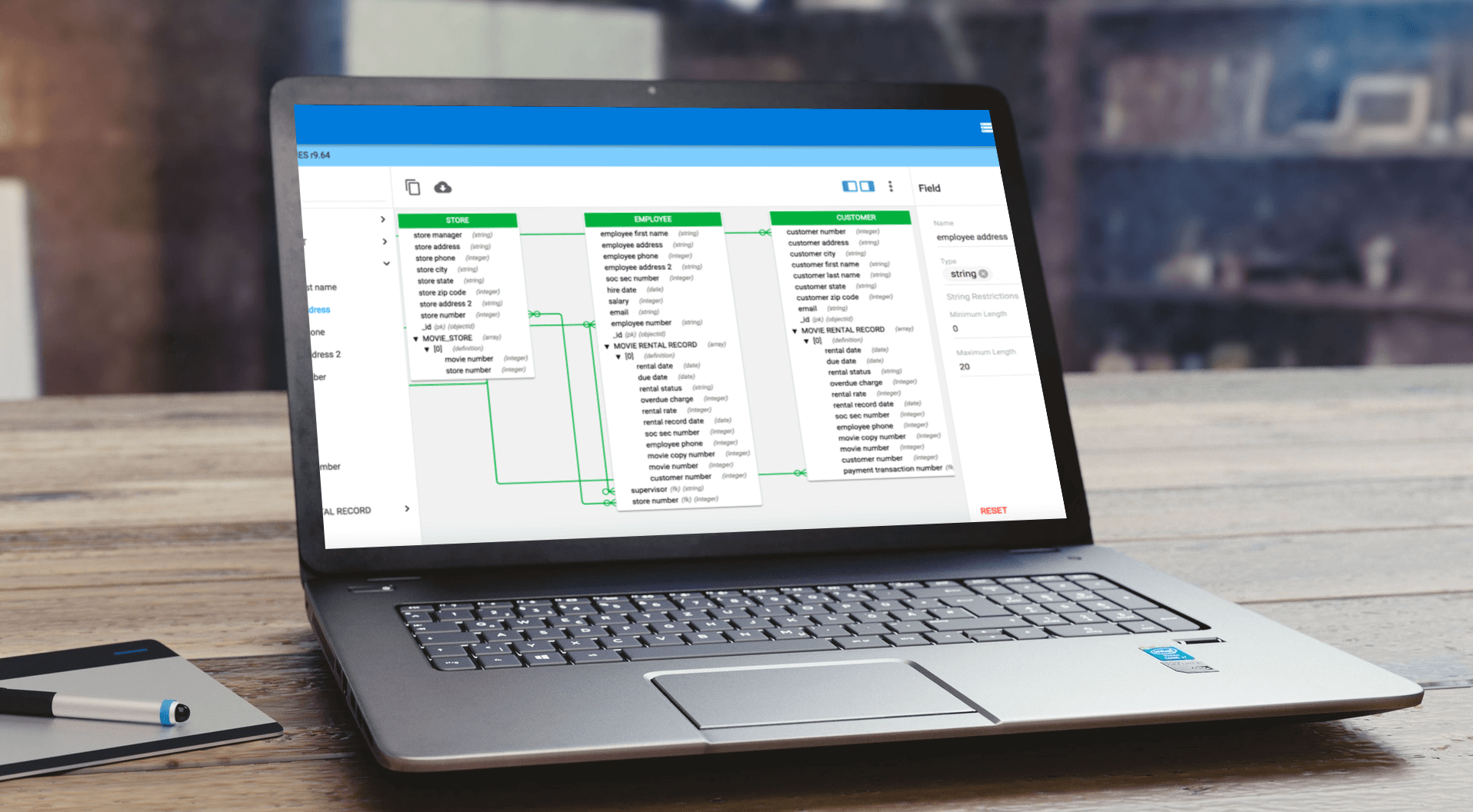

Techopedia defines NoSQL as “a class of database management systems (DBMS) that do not follow all of the rules of a relational DBMS and cannot use traditional SQL to query data.”
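To make that definition concrete, here is a minimal sketch in Python that contrasts a fixed-schema relational table with a document-style collection. It is illustrative only: the `customers` data, the `twitter` and `orders` fields, and the `find` helper are hypothetical names invented for this example, and the plain Python list stands in for a document store rather than representing any particular NoSQL product's API.

```python
import sqlite3

# Relational side: the table's columns are declared up front.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customers (id, name) VALUES (?, ?)", (1, "Ada"))

try:
    # A row carrying a field the schema does not declare is rejected.
    conn.execute(
        "INSERT INTO customers (id, name, twitter) VALUES (?, ?, ?)",
        (2, "Grace", "@grace"),
    )
    relational_accepts_new_field = True
except sqlite3.OperationalError:
    relational_accepts_new_field = False

# Document side (hypothetical stand-in for a document store):
# each record is self-describing, so shapes can differ per record.
customers = [
    {"_id": 1, "name": "Ada"},
    {"_id": 2, "name": "Grace", "twitter": "@grace"},
    {"_id": 3, "name": "Linus", "orders": [{"sku": "A-100", "qty": 2}]},
]


def find(collection, criteria):
    """Return documents whose top-level fields equal every key/value in criteria."""
    return [
        doc
        for doc in collection
        if all(doc.get(key) == value for key, value in criteria.items())
    ]


# Query by example instead of writing a SQL statement.
with_twitter = find(customers, {"twitter": "@grace"})
```

The relational insert fails until the schema is altered, while the document collection accepts records of different shapes and is queried by example rather than with a SQL statement. Real document databases add indexing, persistence and distribution on top of this basic idea.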

The rise of the NoSQL database

The rise of NoSQL can be attributed to the limitations of its predecessor. SQL databases were not conceived with today’s vast amount of data and storage requirements in mind.

Businesses, especially those with digital business models, are choosing to adopt NoSQL to help manage “the three Vs” of Big Data: increased volume, variety and velocity. Velocity in particular is driving NoSQL adoption because of the bottlenecks that traditional relational databases can hit when processing data that arrives at high speed.

MongoDB, the fastest-growing supplier of NoSQL databases, notes this when comparing the traditional SQL relational database with the NoSQL database, saying “relational databases were not designed to cope with the scale and agility challenges that face modern applications, nor were they built to take advantage of the commodity storage and processing power available today.”

With all this in mind, we can see why the NoSQL database market is expected to reach $4.2 billion in value by 2020.

What’s next and why?

We can expect the adoption of NoSQL databases to continue growing, in large part because of Big Data’s continued growth.

Analysis also indicates that data-driven decision-making improves productivity and profitability by 6%.

Businesses across industries appear to be picking up on this. An EY/Nimbus Ninety study found that 81% of companies understand the importance of data for improving efficiency and business performance.

However, understanding the importance of data to modern business isn’t enough. What 100% of organizations need to grasp is that strategic data analysis that produces useful insights has to start from a stable data management platform.

Gartner indicates that 90% of all data is unstructured, highlighting the need for dedicated data modeling efforts, and at a wider level, data management. Businesses can’t leave that 90% on the table because they don’t have the tools to properly manage it.

This is the crux of the Any2 data management approach – being able to manage “any data” from “anywhere.” NoSQL plays an important role in end-to-end data management by helping to accelerate the retrieval and analysis of Big Data.

The improved handling of data velocity is vital to becoming a successful digital business, one that can effectively respond in real time to customers, partners, suppliers and other parties, and profit from these efforts.

In fact, the speed with which businesses can harness and query large volumes of unstructured, structured and semi-structured data in NoSQL databases makes these databases a critical asset for supporting modern cloud applications and their demands for scale, speed and agile development.
